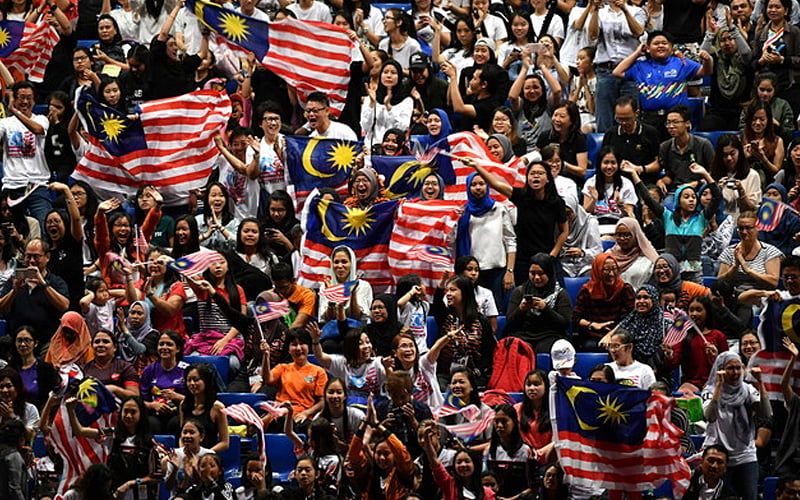

During the 2024 election, America was again bombarded with a record level of election misinformation. New generative AI technology has made it easier and cheaper for bad actors to make ‘cheap fakes.’ This misinformation has real consequences: the Brennan Center for Justice found that “[election misinformation] menaces election officials, with 64 percent reporting in 2022 that the spread of false information has made their jobs more dangerous” and “interferes with voters’ ability to understand and participate in political processes.” Social media platforms are responsible for a large portion of this problem: a study from MIT found that fake news can spread more than 10 times faster than the truth, in part because stories unconstrained by truth or logic can carry more attention-grabbing headlines. Social media also allows anyone to become a ‘news source.’ The most viral posts are often taken out of context or just plain false: research has found that when users search for news on TikTok, over 20% of the results contain misinformation, and 40% of medical videos on the platform have misleading content.

Social media companies must take a stand and work together to stop this ever-growing problem of misinformation. We can no longer let our elections be manipulated by foreign actors or have our children suffer the consequences of their parents using medical treatments they saw on TikTok.

The solutions to this problem are simpler than one might think. The most straightforward is for the platforms at the center of the misinformation crisis to enforce stricter misinformation rules, especially around key periods like elections or pandemics. Penalties should scale with the offense, with the harshest reserved for those who intentionally spread malicious content: creators who upload manipulative or malicious misinformation should have that content removed and their accounts banned. In addition, any satirical misinformation should be clearly labeled, to prevent anyone from mistaking it for fact. To halt the misinformation crisis, Congress needs to enact legislation that enforces these changes, remedying a critical problem with the way people get information today.

Beyond these minimum legislative efforts, individual companies should take proactive steps to enforce content moderation. To protect the integrity of the content on their platforms, the leaders in social media should invest in AI bots that scan posts for misinformation, and should set up systems within the platform for viewers to report misinformation, using the collected reports as training data for their bots. Over time, moderation would no longer have to rely on any single party: the bots would flag the majority of cases, and users would catch what the bots miss.

Misinformation is a serious issue in the media we use today, and developing the technologies to counter it is necessary for the survival of free speech and our democracy.

Sources

Gross, Grant. “Fake News Spreads Fast, But Don’t Blame the Bots.” Internet Society, 21 March 2018, https://www.internetsociety.org/blog/2018/03/fake-news-spread-fast-dont-blame-bots/?gclid=CjwKCAjwq4imBhBQEiwA9Nx1BiZeNqvk2VY8ajY31PG1wtUoZLjfSA4jAxNKYMHG7jcsZaLcdAKOAxoCtHkQAvD_BwE. Accessed 24 October 2024.

Micich, Anastasia. “How misinformation on social media has changed news.” PIRG, 14 August 2023, https://pirg.org/edfund/articles/misinformation-on-social-media/. Accessed 24 October 2024.

Panditharatne, Mekela, and Noah Giansiracusa. “Election Misinformation.” Brennan Center for Justice, https://www.brennancenter.org/election-misinformation. Accessed 24 October 2024.

Snyder, Dan. “Misinformation is a danger in the 2024 election. This group is helping voters recognize it.” CBS News, 6 September 2024, https://www.cbsnews.com/philadelphia/news/how-to-recognize-election-misinformation-danger/. Accessed 24 October 2024.
